I’m a sports fan, and lately my Instagram feed has been popping with NFL coaches making oddly candid comments in postgame press conferences.

Here’s embattled Miami Dolphins coach Mike McDaniel after a loss to the Bills:

Green Bay Packers coach Matt LaFleur had similarly tart remarks after a loss to the lowly Cleveland Browns. Yesterday I saw Yankees outfielder Aaron Judge dropping f-bombs after a playoff game.

These are, of course, fake videos produced using generative AI (GenAI) tools.

AI video creation took a big leap forward this week as OpenAI released Sora 2 along with a new social app where users can create and share 10-second AI videos. Although the Sora app is still invite-only, it averaged 100,000 downloads per day from Oct. 1 to Oct. 6.

That’s massive adoption. Hyper-realistic AI slop is about to overtop the dam. The implications for society are profound. The old rules of visual evidence no longer apply.

How do we cope? The first time I saw the Dolphins coach video it completely fooled me. The experience led me to look into the ways in which AI is collapsing our personal, social, political, and legal rules of evidence—and how we can adapt to avoid being duped.

Take heart; we’ve done this before

Today’s collapse of video evidence feels especially dramatic because we’ve been living through a period in which CCTV and smartphone video offered us the illusion of conclusive proof. Finally, white people were seeing evidence of the ‘driving while black’ traffic stops we’d been told about (but often didn’t believe). Juries could see, with their own eyes, perpetrators caught on camera robbing the store.

This period ran roughly from the Rodney King video (1991) to the Diddy hotel hallway surveillance tape (2024).

And now it’s over.

Before you wail and gnash, it’s helpful to remember we’ve survived earlier versions of this. Adobe Photoshop has existed since 1990. In the 1930s, Stalin had his enemies erased from photos with a scalpel, glue, and airbrushed paint. In the early 1900s, Edward S. Curtis staged ‘documentary’ photos of Native Americans, dressing his subjects in traditional clothing that wasn’t even from their region, let alone their tribe.

The camera has always lied. We just didn’t realize it.

AI and the liar’s dividend

The collapse of video evidence hits especially hard here in America, where Fox News and Donald Trump have spent the past decade undermining public confidence in all evidence everywhere.

Scholars have a term for this dynamic: The liar’s dividend. When so much bullshit floods the zone, a reasonable person has little choice but to disbelieve everything. And so the liar collects his dividend: His falsehoods have diluted the value of the truth until the two seem equal.

This is where we’ve arrived in 2025. The old dynamics have flipped. Instead of assuming visual evidence is real and then weeding out the fakes, a reasonable person now must assume everything is fake and demand proof of authenticity. Are you really my daughter calling me for help? Prove it.

Enjoy what you’re reading?

Become an AI Humanist supporter.

First step: Know the state of AI video generation

In AI circles there’s a famous benchmark of progress in GenAI video. It’s Will Smith eating dinner. Don’t ask why; nobody knows.

This is how fast the technology has evolved in just two years. Trust me when I say you’ll want to fast-forward through this thing.

We’ve gone from “I can spot AI every time” to “there’s no way to tell” faster than milk spoils. The guardrails have fallen. In April 2024, Microsoft researchers unveiled VASA-1, a breakthrough model that could turn a single photo and an audio clip into lifelike talking-face video. Microsoft chose not to release it for public use because of its potential for abuse and deception.

Eighteen months later, that sensible caution feels like a quaint gesture from a bygone age.

With the limited release of Sora 2, OpenAI insists “this app is made to be used with your friends,” not as a machine to mass-produce deepfake disinformation. To a certain degree, the company is complying with state right-of-publicity laws by not allowing users to create fake videos of Taylor Swift.

But many others are not.

Be aware of bad actors

Clonos is the company whose AI tools produced the video of the Dolphins coach posted above. It’s one of thousands of AI startups embracing a no-rules, zero-ethics mentality. For $10 a month you can create similar videos—no guardrails, no limits, no questions asked.

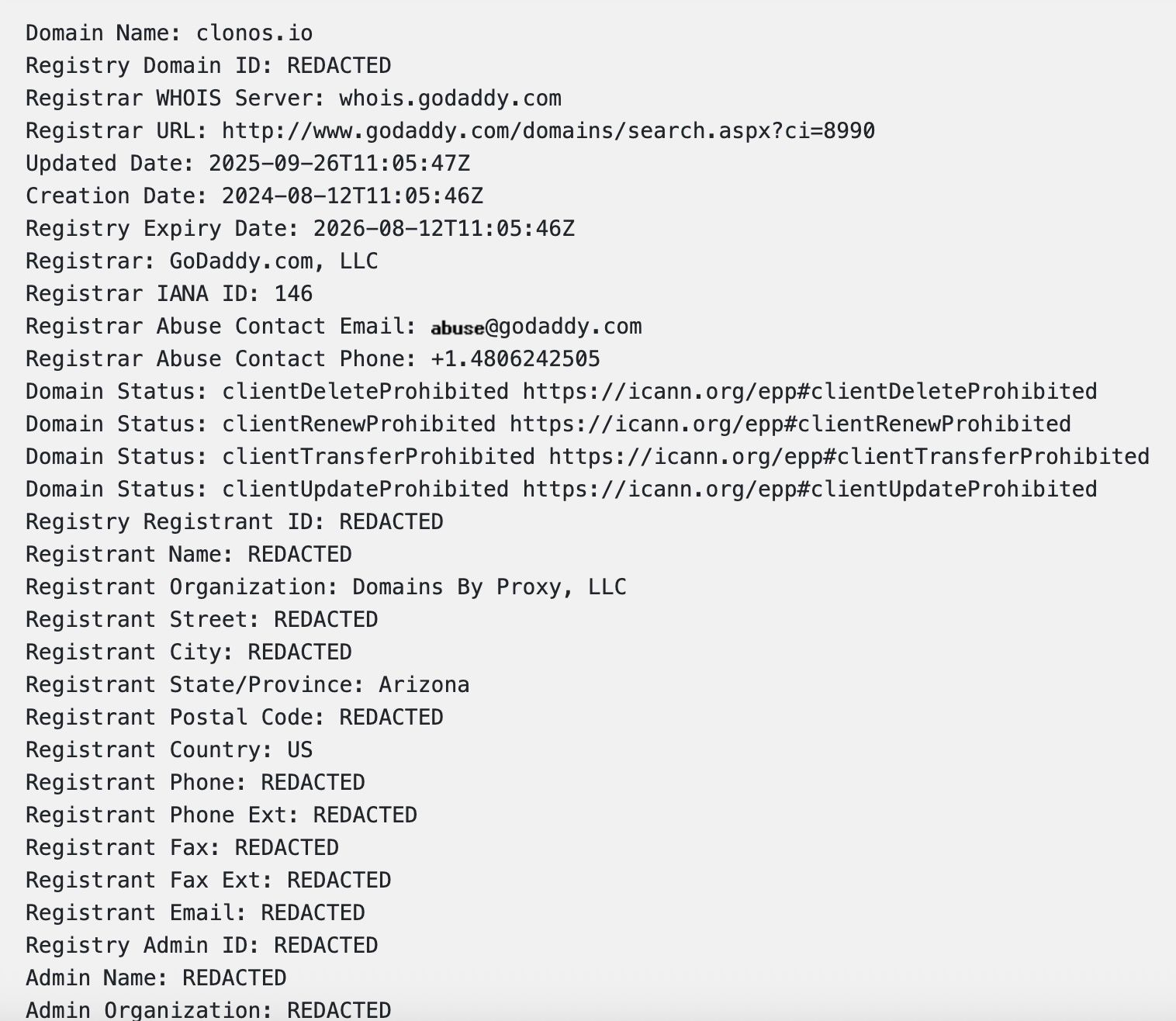

Who’s behind Clonos? Good question. The Clonos.io domain was registered through a third-party privacy service called Domains By Proxy, an Arizona company founded by GoDaddy billionaire Bob Parsons. Domains By Proxy is notorious as the fraudster’s best friend, the go-to service for every company looking to hide from public view. When you go digging for Clonos’ corporate information, this is what you find:

Clonos is by no means the worst. Below them things turn vile quickly, with dark web companies offering apps that turn innocent snapshots into explicit deepfake porn.

Know that technological solutions exist

The ability to embed authenticity-verification tools within images and video already exists. The big tech companies simply don’t want to use it.

A few good actors are out there. Adobe, for instance, embeds digital watermarks within outputs created with its Firefly text-to-video tool.

The truth is that OpenAI, Google, Meta, Microsoft, Apple, and Amazon have spent hundreds of millions of dollars lobbying to defeat measures that would require them to embed this provenance data in their products.

Instead, we have to do the work. Here are some tips to get started.

Withhold judgment

In the digital economy your attention, your judgment, and your emotional response are tokens of value. Stop giving them away for free. No more reflexive sharing.

To paraphrase my favorite pair of defense attorneys: Slow the fuck down. Withhold judgment. Wait. When it comes to digital evidence, your inner truth detector has to reject Malcolm Gladwell and his spun-up Blink theory of trusting snap judgments. Your initial reaction is often wrong.

Gather context

Ask who’s pushing or publishing the video. Click around for sourcing. (It took me about three clicks to find the Clonos source for the Dolphins coach video.) Ask yourself:

Is this highly unlikely?

Is there a point of view being pushed?

Is there an ask attached? (Money, share, follow, email address, etc.)

Check against mainstream sources

If a well-known figure is doing something outrageous on video, check with slower mainstream sources to see if they’re covering it.

Think old school: USA Today, New York Times, Wall Street Journal, Seattle Times, ESPN, The Athletic, the Associated Press. Not click farms like the Daily Mail or Times of India.

If the thing actually happened, these outlets will report it. But it will take time, because verifying authenticity, checking sources, and gathering quotes from living humans requires human effort.

Trust me, I’ve done this for a living. We all have legitimate complaints about mainstream media, but 99% of reporters work hard to deliver accuracy. If they don’t, they lose their jobs.

I figured out the Dolphins coach video wasn’t real because ESPN and sports radio didn’t pick up on it. They’re desperate for material; they’d never pass up that red meat. When video of a Nazi kid disrupting a University of Washington class spread on social media last week, it took 24 hours for the Seattle Times to verify it. But they did, and they provided full context.

Consult local sources

This encompasses everything from regional newspapers to neighborhood blogs. In my city I hit up the Seattle Times, The Stranger, Capitol Hill Seattle Blog, and West Seattle Blog.

Social media and cable news float in the digital ether. They’re not grounded in an actual place. Geographically rooted media is produced by humans who live where they report and connect with local sources. They know when the national media is spitting crap about their city.

Do you believe Portland is a lawless city on fire? Check with the Oregonian, the Portland Mercury, or Willamette Week.

Wondering about that Mark Sanchez stabbing/arrest in Indianapolis? Read how the Indy Star is reporting the story.

Check with other humans

If you’re unsure (and you should be), check with friends and family—especially the young ones. It’s a simple question. “Did you see that video of…?”

Often the response will be, “Yeah, another crazy AI video,” or “I read about that scam last week, you don’t owe any overdue fast-lane tolls.”

In all things: Seek broader context and diverse inputs, and move slower than you think you should. As my mother continues to tell me: Think twice, act once. Authentic evidence still exists in the world. We just need to grow and adapt to recognize it.

Further reading on AI and evidence

Go deeper on this subject:

The Liar’s Dividend: Can Politicians Claim Misinformation to Evade Accountability?, Kaylyn Jackson Schiff et al., Yale Institution for Social and Policy Studies, 2024.

AI evidence in jury trials: Navigating the new frontier of justice: Thomson Reuters article on the challenges AI introduces into the legal system.

Just how good is Sora 2? PCMag takes the new GenAI tool for a test drive.

Sora 2 is an unholy abomination: Skepticism from Vox.

Adobe’s Firefly, C2PA, and Content Credentials: Primer on the GenAI tools that embed watermarks into digital output.

Database of AI hallucinations submitted in court filings: Lawyers gonna use ChatGPT to write their briefs. Chatbots gonna hallucinate. Caveat emptor.


MEET THE HUMANIST

Bruce Barcott, founding editor of The AI Humanist, is a writer known for his award-winning work on environmental issues and drug policy for The New York Times Magazine, National Geographic, Outside, Rolling Stone, and other publications.

A former Guggenheim Fellow in nonfiction, he is the author of The Measure of a Mountain, The Last Flight of the Scarlet Macaw, and Weed the People.

Bruce currently serves as Editorial Lead for the Transparency Coalition, a nonprofit group that advocates for safe and sensible AI policy. Opinions expressed in The AI Humanist are those of the author alone and do not reflect the position of the Transparency Coalition.

Portrait created with the use of Sora, OpenAI’s imaging tool.