Here at The AI Humanist, we work to keep you updated on stories worth reading without cluttering up your life. We sort the pile so you don’t have to. Here’s a rundown of the interesting chatter happening right now.

1. Leave Grandpa in the ground.

There’s an entire genre of GenAI startups racing to reanimate your dead beloveds. Miss your mother? Want to talk with your grandpa one more time? Submit an old photo, type in a few prompts, and voilà! The Talking Dead.

Washington Post columnist Monica Hesse gave these apps a test run a couple of days ago. The results were…creepy.

“I don’t know how else to describe it,” she wrote, “but were you unfortunate enough to have watched the Netflix show about serial killer Ed Gein, in which he puts on a mask made of a dead woman’s skin? It was that.”

Okay, but under the Will Smith Spaghetti Principle, we know AI verisimilitude will improve, and quickly. What then?

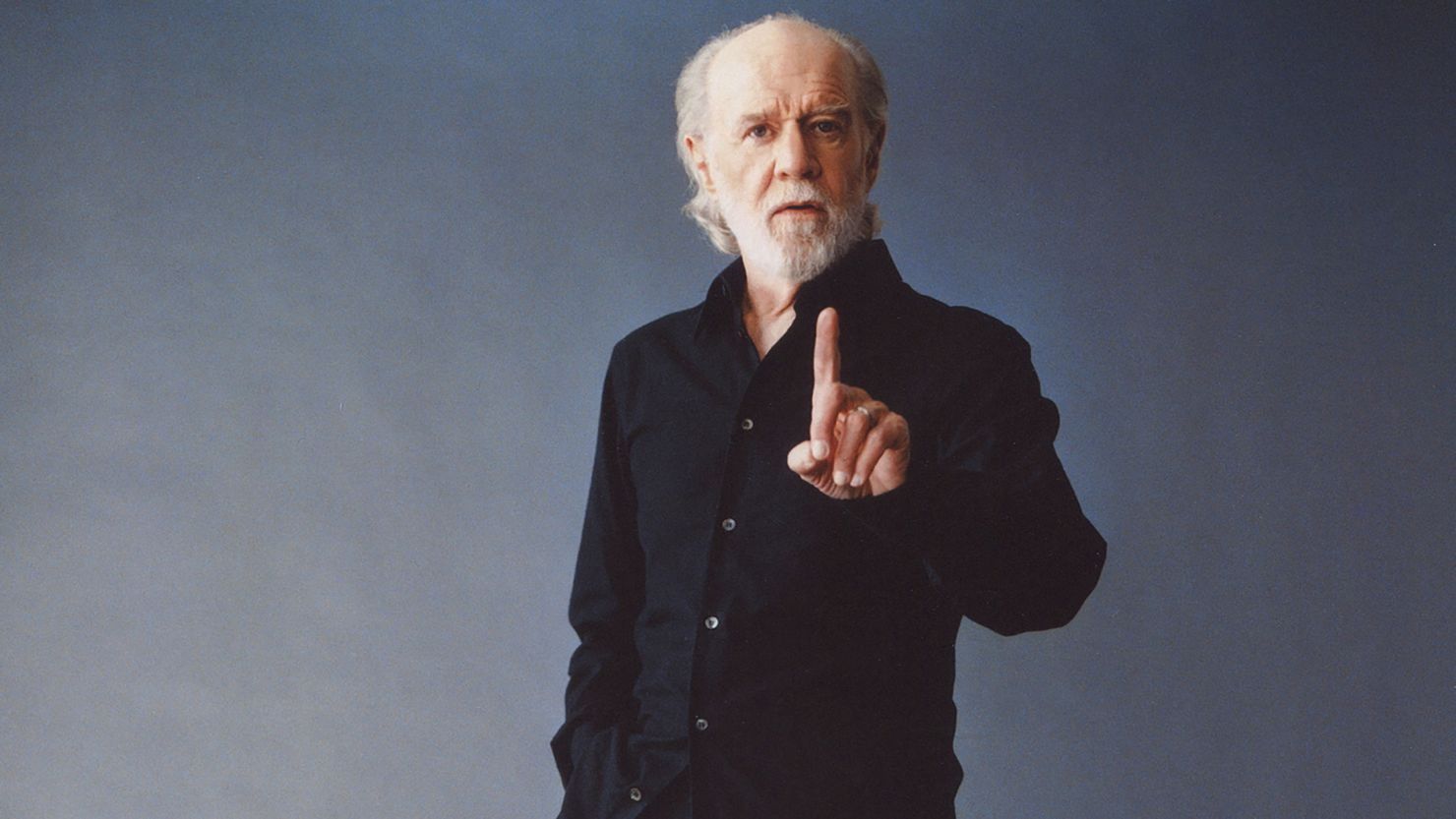

Hesse spoke with Kelly Carlin, the daughter of George Carlin. GenAI clips of the late comedian have been clogging her social feed for months. They’re high quality. She hates it. “It’s really disturbing to see your dead father resurrected by a machine.” The daughters of Robin Williams and Martin Luther King, Jr., said the same thing: Stop it.

Just as correlation doesn’t imply causation, “can” doesn’t imply “should.” Maybe we need to consider the profound role death and memory play in the formation of our selves before we start manufacturing new false memories.

Enjoy what you’re reading?

Become an AI Humanist supporter.

2. California just enacted America’s first AI chatbot law. Tech companies hate it, so you know it’s good.

Too often I hear colleagues rage against AI and sigh in resignation over the inevitable robot takeover. As someone who works in AI policy, let me tell you: We are not powerless.

Three days ago, California Gov. Gavin Newsom signed SB 243, America’s first AI chatbot safety law. My colleagues at the Transparency Coalition worked hard to get it passed. So did Megan Garcia, the mother of Sewell Setzer, the 14-year-old who killed himself last year after being seduced by a Character.AI chatbot.

The new law isn’t perfect (none is), but it’s a solid first step. AI chatbots must now include active protocols to detect and respond to instances of suicidal ideation. When the user is a minor (under 18), the chatbot must disclose that it is AI and not a real human, and must repeat that disclosure at least every three hours during a continuing conversation.

Also: When a kid’s talking with the bot, the bot cannot produce GenAI porn or encourage the kid to engage in sexually explicit conduct. The fact that this must be written into the law says a lot about the ethics of tech companies in 2025.

The new law arrived the same week that OpenAI CEO Sam Altman announced his company would loosen restrictions on its GenAI content: “As part of our ‘treat adult users like adults’ principle, we will allow even more, like erotica for verified adults,” he said on X.

3. Age verification innovation: Start with the device, not the app.

Treat adults like adults: 100%. And also maintain sensible restrictions for kids. We don’t let children drive cars, buy alcohol, or get married. There are good reasons for these barriers.

The trick in the digital world is how to age-gate the kids. I’m deep into a research project on new AI laws, and yesterday I ran across one that’s gotten almost no publicity but could make a real difference in keeping kids safer online.

California’s Digital Age Assurance Act, signed into law on Monday, requires digital device makers to embed age-verification systems in any product sold after Jan. 1, 2027. When a user sets up an account on a new smartphone or laptop, they must indicate their birth date or age. That lets the iPhone or Android app store check the user’s age automatically before a download. It’s a smart approach, and more states should adopt similar laws in 2026.

4. “Dearest Claude, I come to you seeking assistance with a most delicate issue…”

A Lady Writing (detail), by Johannes Vermeer, c. 1662-1667

In a paper published earlier this month, researchers found that talking to an AI chatbot in more formal language increases the accuracy of its responses.

The authors found that people tend to downgrade their language when interacting with Claude, ChatGPT, and other AI chatbots. This may be a holdover from our experience with Google, which trained us to clip our requests into keywords (“Taylor Swift tickets Seattle”) for efficiency.

The most popular chatbots are built on LLMs (large language models), which are trained to predict the next word based on patterns in the enormous amount of text they have ingested, much of it grammatically correct, carefully written prose. Clearer, more formally precise prompts give the model better material to work with, so its answers are more likely to match what you’re looking for.

5. Productivity may be the pin that pops the AI investment bubble.

Eryk Salvaggio, a visiting professor in RIT’s Humanities, Computing, and Design program, has a good piece in Tech Policy Press this week about Generative AI’s Productivity Myth.

As of October 2025, we’re still in the white-hot phase of AI investment. Venture capitalists are showing up at the ER with strained rotator cuffs from throwing so much cash at AI startups. Corporate AI investment topped $250 billion in 2024, and private investment climbed 45% over 2023.

All of this exuberance may or may not be irrational, but it rests on the notion that AI investments will pay off for companies in the form of significant productivity gains. On the ground, that means firing four staffers in a five-person department and telling the sole survivor to use AI to keep those KPIs up and to the right.

So what happens if those productivity gains don’t arrive? Or are smaller than anticipated?

Salvaggio points out that studies have so far found only modest AI productivity gains. The benchmark Stanford HAI 2025 AI Index Report found that 78% of organizations report using AI, and 71% report using generative AI. Adoption, in other words, is widespread.

Here’s the key piece of data hidden in the Stanford HAI report:

“Most companies that report financial impacts from using AI within a business function estimate the benefits as being at low levels.”

How low? Only half of companies using AI reported cost savings, and those cost savings averaged less than 10%. Marketing and sales departments were most likely to report revenue gains, but “the most common level of revenue increase was less than 5%.”

I’ll write more on this later, but for now I want to share this: I rode out a previous wave of smaller but similar investment exuberance. In 2015, risk-hungry investors were convinced the newly legal cannabis industry was about to create a new generation of billionaires. That bubble burst, and the investment cash disappeared, on Oct. 17, 2019, the one-year anniversary of the opening of Canada’s legal cannabis market. At the one-year mark, investors took stock. They saw that few legal Canadian cannabis companies were actually turning a profit. That augured poorly. If you couldn’t make money in a friendly, federally legal market like Canada’s, where could you? Investors pulled their cash and fled cannabis entirely.

The Stanford report noted, cautiously, that “most companies are early in their journeys.” But if more significant AI-led productivity gains don’t arrive fairly soon, it will become harder for CEOs to justify investing in systems that don’t pay off.

What I’m reading this week

An off-the-cuff list meant to spark new ideas and interests.

Dark Renaissance: The Dangerous Times and Fatal Genius of Shakespeare’s Greatest Rival, by Stephen Greenblatt. The author of The Swerve and Will in the World returns with another historical ripper. This biography of the playwright Christopher Marlowe immerses you in Elizabethan England. Political-religious intrigue and bawdy pursuits abound. I’m totally digging it.

Take Your Eye Off the Puck: How to Watch Hockey By Knowing Where to Look, by Greg Wyshynski. The NHL season starts this week. This is a great intro to the game. Let’s go Kraken.

Divided locker room, disastrous results: Players, parents blame Belichick culture for UNC problems, by Pat Welter, WRAL News. The first full report on Bill Belichick’s deliciously disastrous year coaching college football. Karma comes hard for the Hoodie.

MEET THE HUMANIST

Bruce Barcott, founding editor of The AI Humanist, is a writer known for his award-winning work on environmental issues and drug policy for The New York Times Magazine, National Geographic, Outside, Rolling Stone, and other publications.

A former Guggenheim Fellow in nonfiction, he is the author of The Measure of a Mountain, The Last Flight of the Scarlet Macaw, and Weed the People.

Bruce currently serves as Editorial Lead for the Transparency Coalition, a nonprofit group that advocates for safe and sensible AI policy. Opinions expressed in The AI Humanist are those of the author alone and do not reflect the position of the Transparency Coalition.

Portrait created with the use of Sora, OpenAI’s imaging tool.